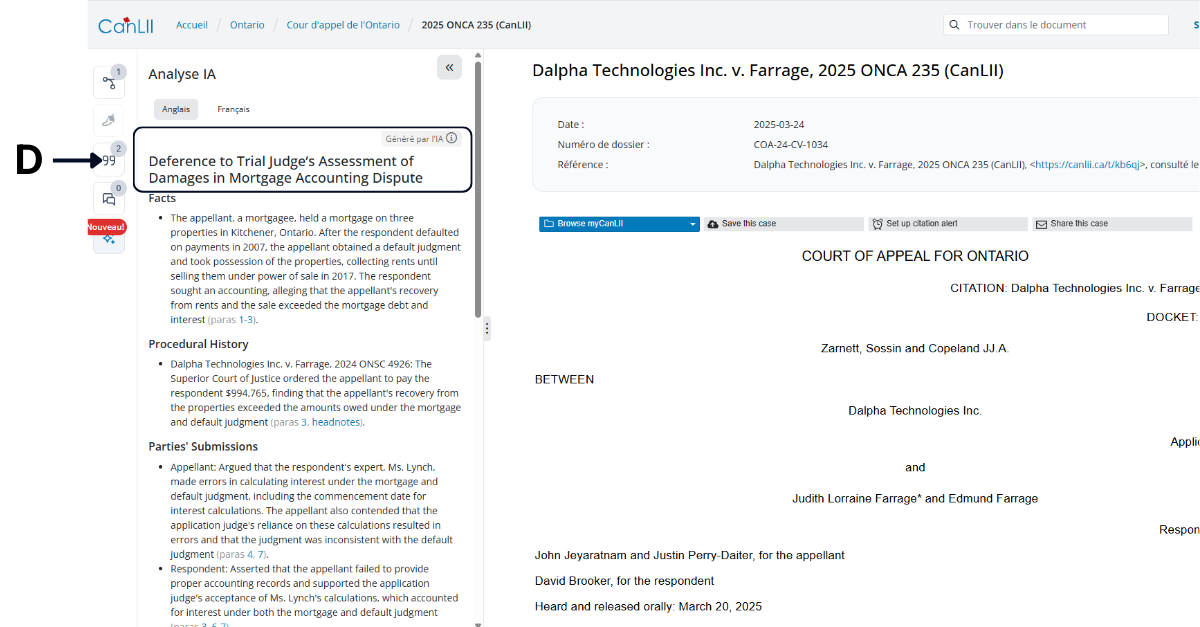

On Tuesday, March 18, CanLII rolled out a redesigned interface for its decision and legislation pages. This new look and feel isn’t just a superficial facelift – it introduces a more intuitive and dynamic approach to consulting legal information. Rather than bouncing between multiple tabs to see case treatments, cited documents, or AI analyses, researchers now get an interactive menu that conveniently keeps all added-value insights right beside the text of the decision. It’s a giant leap toward a more seamless, user-friendly research experience.

One of the important factors driving this change is the capacity, developed over the last two years, to use AI to produce intelligence from primary legal information. For decades, commercial legal publishers have been selling intelligence produced by humans. Meanwhile, CanLII, underpinned by Lexum’s expertise, operated on an open access model based on mechanically extracting added value from legal information. But today’s more sophisticated large language models (LLMs) are redefining what can be automated, opening the door to new types of contextual knowledge for legal researchers. As these new capabilities pile up, however, the user interface must be adjusted to provide a better browsing experience.

In 2024, we announced several projects supported by Canadian Law Foundations that added hundreds of thousands of AI-powered summaries to decisions and legislation on CanLII. Now confirmed in twelve provinces and territories (with the thirteenth currently considering it), new AI summaries will continue to be rolled out in large numbers over the months to come. Before long, a significant proportion of historical case law, all current case law and all consolidated legislation available on CanLII will benefit from these concise AI analyses. Since version 7.4, the same technology can also be made available on your organization’s official website or intranet via our Decisia online legal publishing platform. The State of New Mexico has summarized all its historical case law since the 1990s with this approach.

While these projects are being completed, Lexum continues to refine its use of LLMs. In addition to summaries, we are now leveraging LLMs to produce several other types of enrichments:

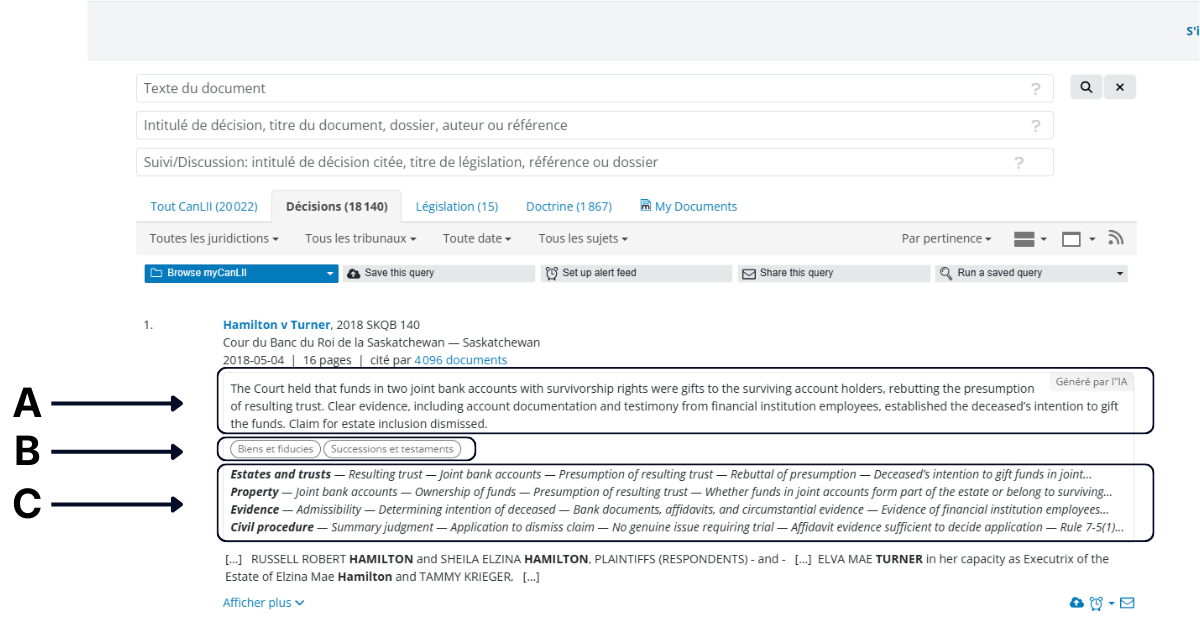

- Concise AI-generated summaries

These short, 2–3 sentence summaries appear directly in search results, offering a concise alternative to the traditional snippet-based approach. They’re designed to capture each decision’s core insight at a glance, saving users time and improving research efficiency.

- Classification by Areas of Law

Each document is categorized under one or more of CanLII’s approximately 40 predefined areas of law, enabling readers to quickly filter search results by subject. Initially powered by Lexum’s in-house AI model, the classification process is now transitioning to large language model (LLM) technology.

- Representative keywords and phrases

Alongside each area of law, the platform highlights the most significant key terms and phrases extracted from the document. These keywords offer a more granular level of indexing, identifying secondary concepts that can help researchers narrow down relevant documents.

- Meaningful titles

Acting as an alternative to official case names, these succinct titles appear above the AI-generated summary to capture the essence of a judicial or administrative decision at a glance.

In early March, CanLII announced the release of these new enrichments for Saskatchewan case law. Since last week, these enhancements have also been available in both official languages for more than 88,000 Ontario decisions. These first releases, still preliminary and limited, pave the way for a broader expansion planned over the course of 2025. Just as with the original AI-powered summaries, our roadmap consists of rolling them out nationwide on CanLII, as well as making them available to other organizations via Decisia where relevant. As we move in that direction, you can expect additional design adjustments to our product line. You should also expect some exciting new search features leveraging these AI-powered enrichments… but that’s for another post!