Lexum focus has always been on using the latest technology to automate legal publishing with the goal of improving the cost/benefit ratio of accessing legal information. For this reason, we historically favoured full text search engines over classification systems based on a thesaurus. The manual labelling of judicial and administrative decisions is a labour-intensive process that has traditionally contributed to the high costs of legal publishing. Where large volumes of decisions are rendered, it also makes exhaustive publishing difficult to achieve, justifying the selection of a limited number of “reported decisions.” Lexum has always headed straight in the opposite direction.

Nevertheless, once you start publishing several hundred thousand of decisions every year and end up with databases of millions of documents, the need for some form of data categorization keeps popping up. Even if equipped with a leading full-text search engine, legal researchers end up with large sets of results for almost any query. They justifiably demand tools helping them to sort out irrelevant material, and a way to categorize case law by fields of law or subjects is the most obvious one that comes to mind. But how can this need be met without investing the efforts of reading and analyzing every single judgment?

Approximately 10 years ago, Marc-André Morissette, VP Technology at Lexum, came up with the idea of using a statistical approach to single out keywords that are used repetitively in a document while being proportionately less common in the rest of the database. On one side this approach has the benefit of being based on statistical algorithms available to the open-source community and facilitated the tagging and characterization of cases among long lists of search results. On the other side, it tends to prioritize factual keywords as the legal concepts are recurring in many similar cases. These factual keywords varying widely, they are also inadequate to power search filters by topics across the entire database.

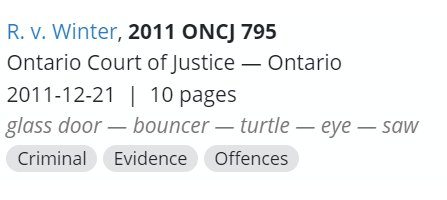

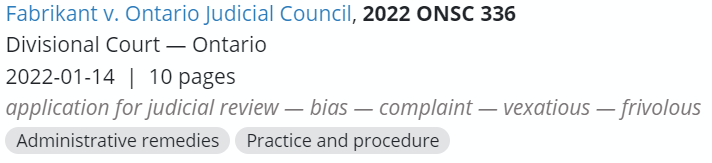

Most of the time, statistically generated keywords provide useful insights:

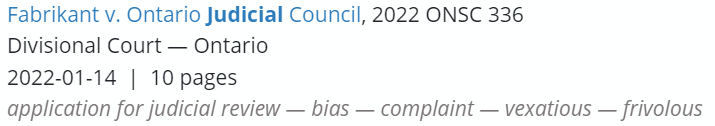

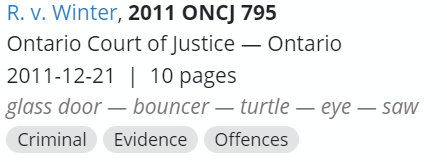

But in some cases, they constitute mystic clues giving very little indication about what is really at stake:

And this is without mentioning all the innovative slurs from testimonial quotations that kept creeping into the list of keywords. Substantial efforts had to be invested to exclude those and prevent the feature from being perceived as an insult generator…

All of this leads to the conclusion that if automated extraction of keywords from case law using a statistical approach was a positive development at the time, it also faces serious limitations. For this reason, recent developments in the field of artificial intelligence were seen by Lexum as an opportunity to revisit its technique for automatically classifying case law.

For several years now, Lexum has been investing substantial efforts in setting up an artificial intelligence (AI) capability specifically designed for the processing of legal information. This has led to several R&D projects, including one focussed on the development of a legal citation prediction algorithm. But the AI generated subject classification for Saskatchewan case law announced by CanLII in June 2021 became the first concrete results of these efforts deployed in a production environment. In January of this year, the same technique was also applied to Ontario case law.

To make these projects possible Lexum used deep learning algorithms, which are a subset of machine learning algorithms allowing a computer to “self-program” a model (a set of mathematical equations akin to computer code) to accomplish a complex task by learning from a set of examples. During the learning phase, these algorithms are provided both input data and the desired output and they slowly adjust the model’s programming to accomplish the desired task. Later, during the inference phase, this model is applied to previously unseen input to produce what is thought to be the desired output. In the current situation, the input is the text of a Canadian court decision, and the output is its associated set of subjects.

For the learning phase, Lexum processed (under permission) thousands of cases that have already been classified by the Law Society of Saskatchewan Legal Resources Library (via their digests database) and the Law Society of Ontario (via the Ontario Reports). The topics and other textual (unstructured) information present in the documents were systematically tagged so that they could be uniformly submitted to the learning algorithm. Lexum created a taxonomy of 42 subjects (or field of laws) that were subsequently mapped to the training data’s hundreds of topics. The Longformer Deep Learning Model1, which is a state-of-the-art solution in deep learning for large documents, was used to create the classification model. This model was selected after experimenting with many other deep learning models and after extensive hyperparameter optimization to maximize precision and recall. At this step, in addition to automated testing, results were examined by trained legal researchers determining if the automatically attributed subjects are adequate and correct.

The resulting AI generated subjects are currently displayed on CanLII’s search result pages, just bellow the older statistically generated keywords.

The use of a standard taxonomy has allowed the introduction of a new search filter “By subject” for the two jurisdictions supported so far. Here is an example of Ontario cases filtered with the subject indigenous peoples. In the future, we plan on adding hyperlinks to these subject labels to facilitate the refinement of search queries.

Lexum is currently progressing toward the roll out its new AI powered classification model to CanLII entire database of nearly 3 million decisions. But before reaching that point, some challenges remain to be addressed:

- Inconsistency among jurisdictions: The current model mimics the distinct editorial policies of the authors behind each dataset. Providing consistent results across jurisdictions requires further tuning.

- Legal documents are too long: Most existing pre-trained transformers are limited to document of maximum 500 words, and the one Lexum uses supports up to 4,000 words.

- No support for French language documents: The current model only works in English.

- Bias towards topics typically found in law reports: The quality of the subjects generated for cases that are not typically reported (for instance CanLII host many small claims cases) is lower.

- Lower performances in jurisdictions without training examples: When presented with cases from other jurisdictions, the classification model can only produce subjects that are appropriate for the jurisdictions it had been exposed to.

- Lack of training material: This is typical of most machine learning projects. To improve the model, additional training datasets from outside CanLII must be identified.

These challenges are not trivial, but our team has a vision for how to tackle each one of them. With the right mix of savvy choices, perseverance, and the usual bit of luck, it should eventually be possible for CanLII users to filter any search query by subject. Maybe even read a short AI generated abstract of each case, but that story is for another blog post…